Regimes of Surveillance

When we initiated the project, one goal was to explore the links between contemporary and colonial surveillance regimes. The photobooth could, as it were, function as a time machine, connecting a contemporary museum visitor with a person in another country a century earlier, not only through the act of being photographed but also through the parallel “machines” behind the photography, each using classification to reinforce a certain idea of the world and its related power structures.

Your algorithm is racist!

Algorithms of Oppression

Author Safiya Umoja Noble describes how, when she began the research that would become her book (Algorithms of Oppression), she met a great deal of ingrained resistance in the academic world to the idea that algorithms can contain bias.

On a fundamental level, there is a need to understand how the sciences, as a human endeavor, are constructed (not given) and naturally reflect the biases of their social situations.

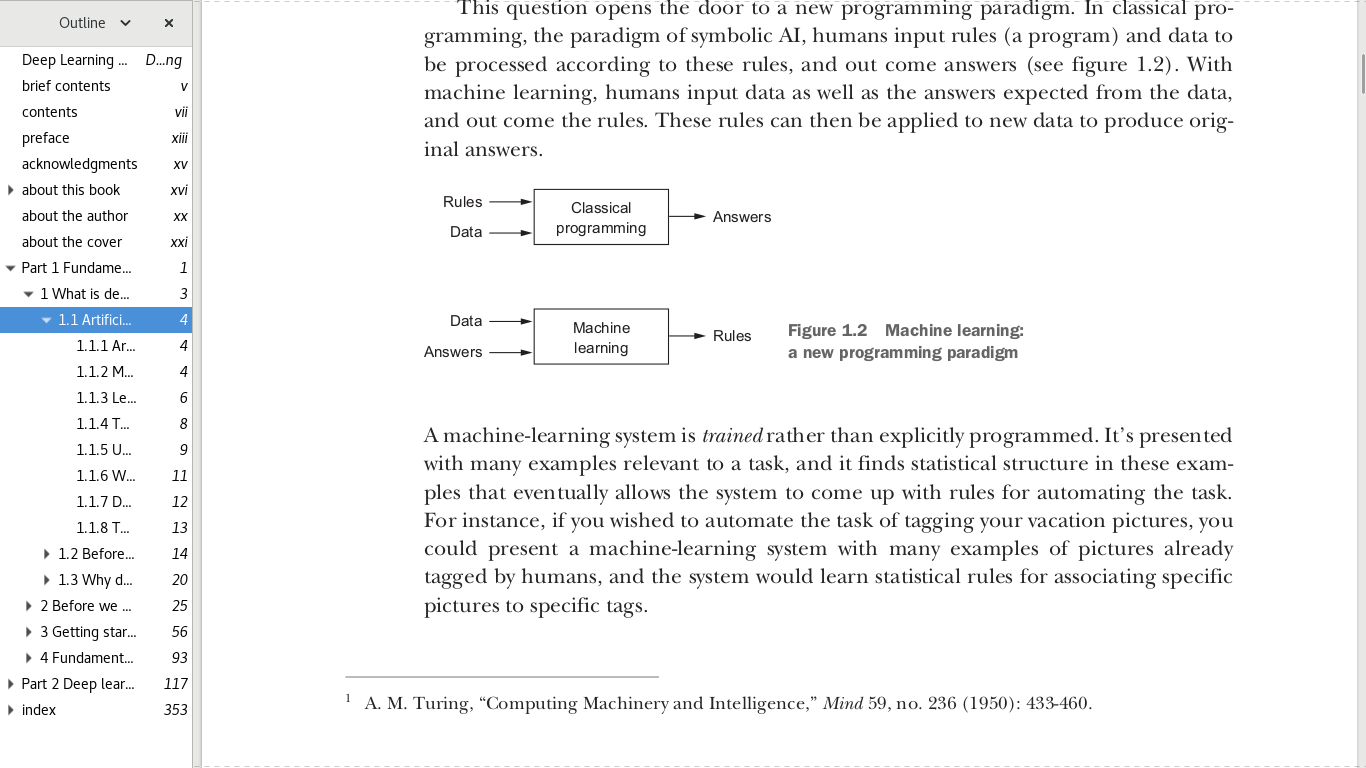

More profoundly, many contemporary techniques, such as those around facial recognition and computer vision, rely on machine learning and are therefore fundamentally based on statistical models derived from the analysis of “labelled datasets”.
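To make the dependence on labels concrete, here is a minimal sketch, not any real face recognition system. It assumes a toy nearest-centroid classifier, made-up two-dimensional "features", and hypothetical labels "A" and "B"; the function names `train_centroids` and `predict` are invented for illustration. The only point is that the same measurements yield the opposite answer once the human-assigned labels change.

```python
from collections import defaultdict


def train_centroids(samples):
    """Average the feature vectors that share a label (one centroid per label)."""
    sums = defaultdict(lambda: [0.0, 0.0])
    counts = defaultdict(int)
    for (x, y), label in samples:
        sums[label][0] += x
        sums[label][1] += y
        counts[label] += 1
    return {
        label: (s[0] / counts[label], s[1] / counts[label])
        for label, s in sums.items()
    }


def predict(centroids, point):
    """Return the label whose centroid lies closest to the given point."""
    def squared_distance(centroid):
        return (centroid[0] - point[0]) ** 2 + (centroid[1] - point[1]) ** 2

    return min(centroids, key=lambda label: squared_distance(centroids[label]))


# Hypothetical data: the features are arbitrary numbers, and the labels
# stand in for categories assigned by human annotators.
labelled = [
    ((0.0, 0.0), "A"),
    ((0.2, 0.1), "A"),
    ((1.0, 1.0), "B"),
    ((0.9, 1.1), "B"),
]

# Same features, but the annotators' labels are swapped.
relabelled = [(features, "B" if label == "A" else "A") for features, label in labelled]

query = (0.1, 0.2)
original_answer = predict(train_centroids(labelled), query)
swapped_answer = predict(train_centroids(relabelled), query)
print(original_answer, swapped_answer)
```

The model has no access to anything beyond the labelled examples, so whatever categories and judgments went into the labelling are what it reproduces.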

Images as data

Face Algorithms

- Face Recognition

- Face Classification

- Face Detection

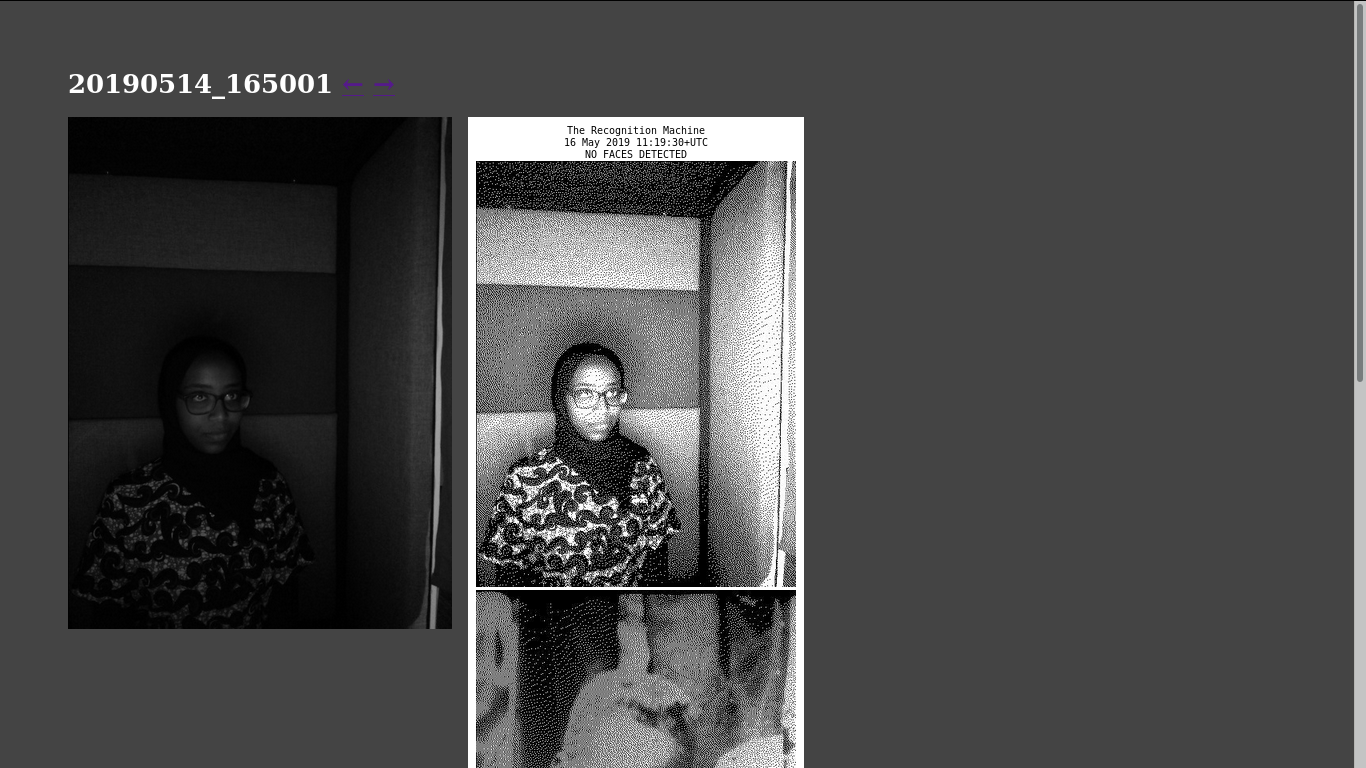

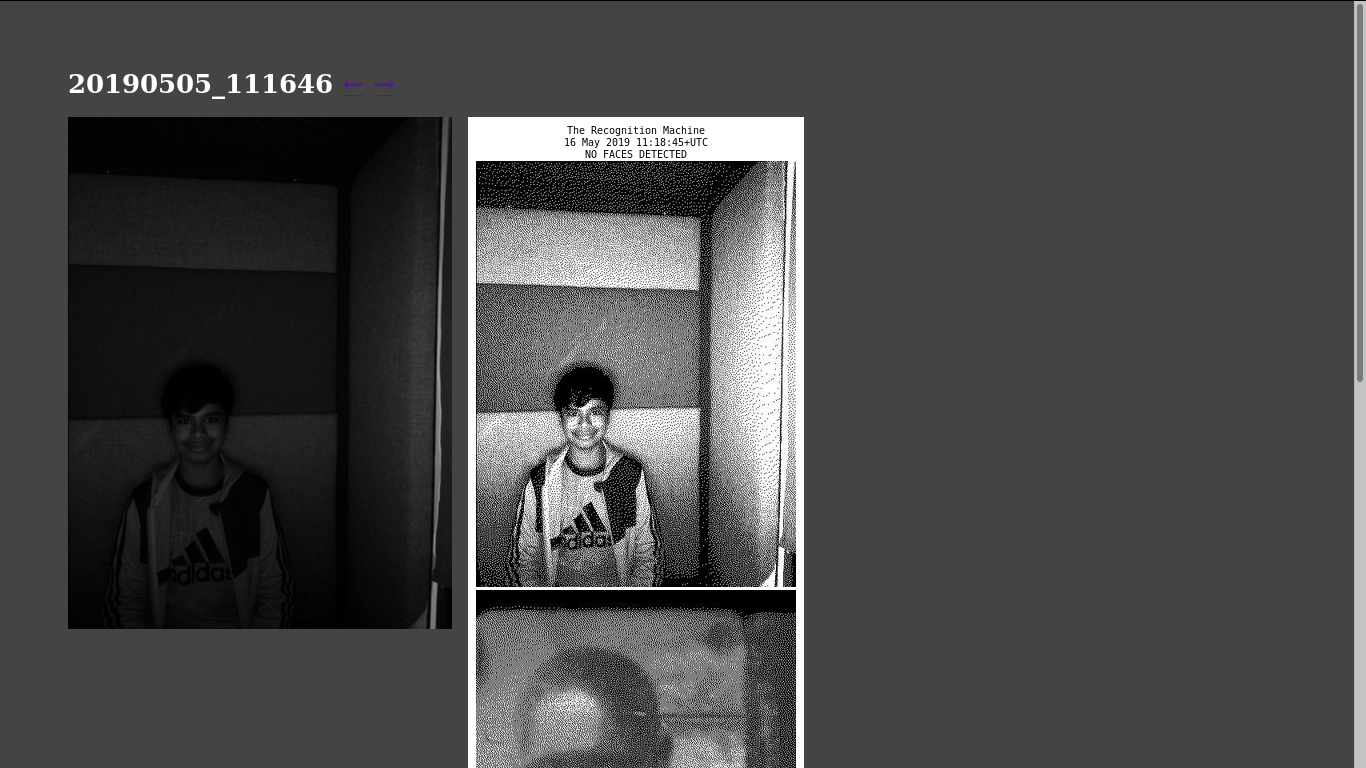

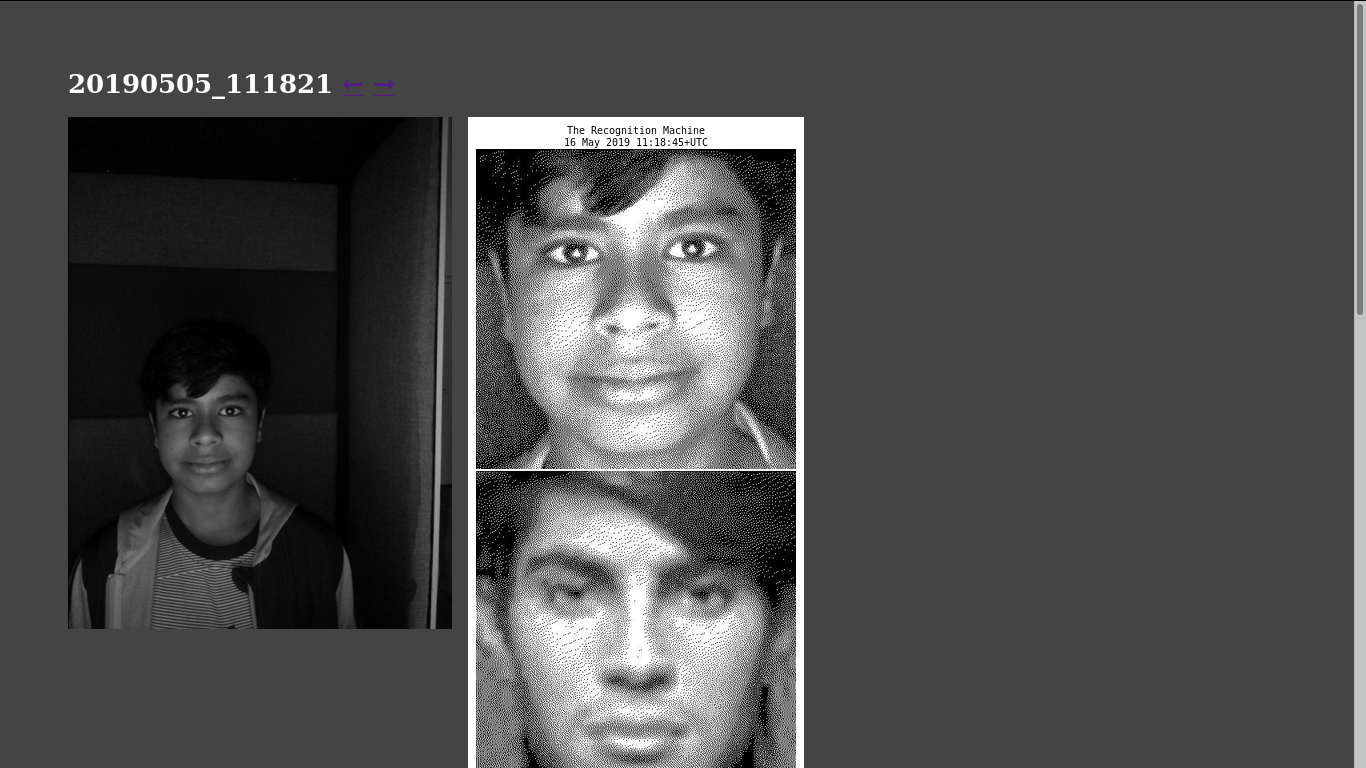

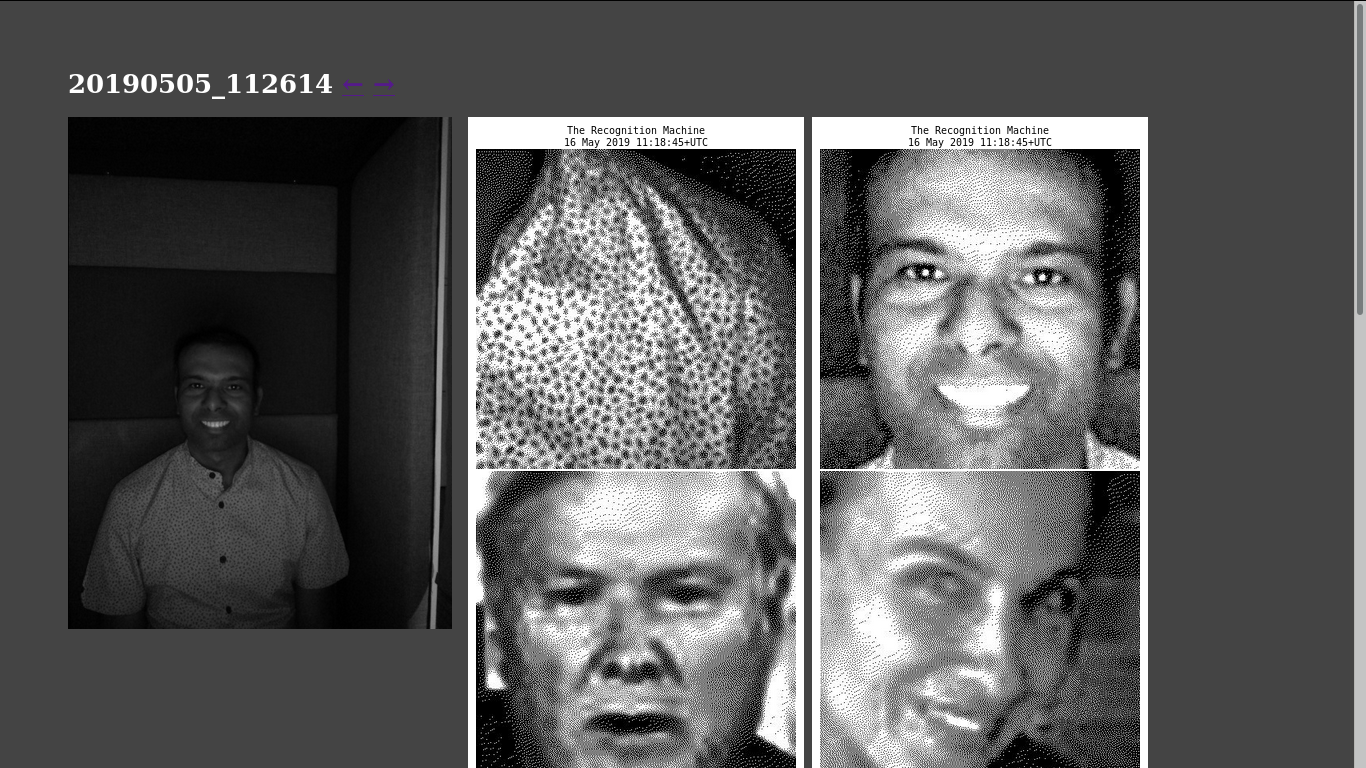

Joy Buolamwini: The coded gaze

Face detection and classification algorithms are also used by US-based law enforcement for surveillance and crime prevention purposes. In “The Perpetual Lineup”, Garvie and colleagues provide an in-depth analysis of the unregulated police use of face recognition and call for rigorous standards of automated facial analysis, racial accuracy testing, and regularly informing the public about the use of such technology (Garvie et al., 2016). Past research has also shown that the accuracies of face recognition systems used by US-based law enforcement are systematically lower for people labeled female, Black, or between the ages of 18–30 than for other demographic cohorts (Klare et al., 2012). The latest gender classification report from the National Institute for Standards and Technology (NIST) also shows that algorithms NIST evaluated performed worse for female-labeled faces than male-labeled faces (Ngan et al., 2015).

Buolamwini, J., & Gebru, T. (2018). “Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification.” Proceedings of Machine Learning Research 81:1-15, Conference on Fairness, Accountability, and Transparency.

AJL: http://gendershades.org/
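The kind of disparity described above only becomes visible when accuracy is reported per group rather than as a single overall figure. The following is a minimal sketch of such a disaggregated evaluation. The records, the group names, and the function `accuracy_by_group` are hypothetical and chosen for illustration; they are not data or code from the Gender Shades study.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute classification accuracy separately for each group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        if truth == predicted:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


# Hypothetical evaluation records: (group, true label, predicted label).
records = [
    ("group_1", "f", "f"),
    ("group_1", "m", "m"),
    ("group_1", "f", "f"),
    ("group_1", "m", "m"),
    ("group_2", "f", "m"),
    ("group_2", "m", "m"),
    ("group_2", "f", "m"),
    ("group_2", "f", "f"),
]

# A single aggregate number hides how errors are distributed.
overall = sum(truth == predicted for _, truth, predicted in records) / len(records)
per_group = accuracy_by_group(records)

print(f"overall: {overall:.2f}")
for group, accuracy in sorted(per_group.items()):
    print(f"{group}: {accuracy:.2f}")
```

In this made-up example the overall accuracy looks respectable while one group bears all of the errors, which is the pattern an aggregate score conceals.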

Modern-day Phrenology

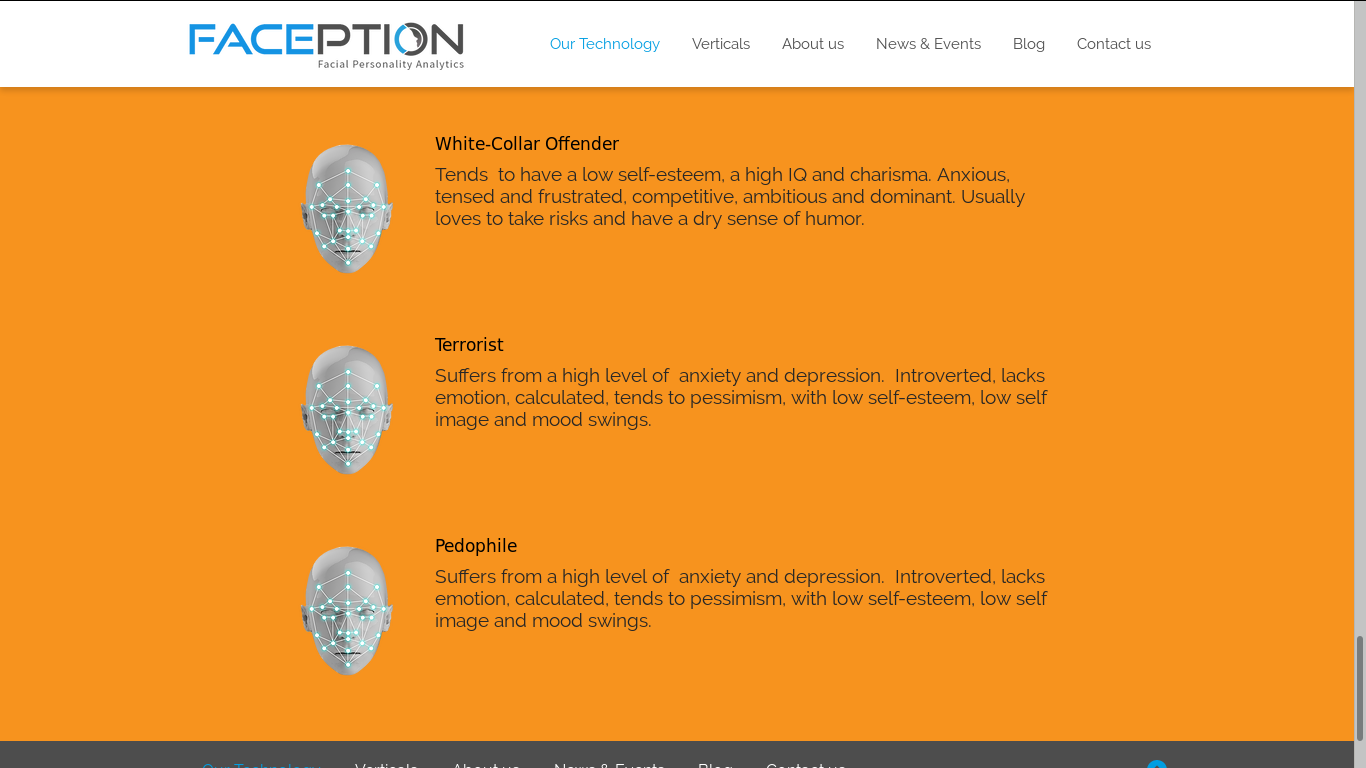

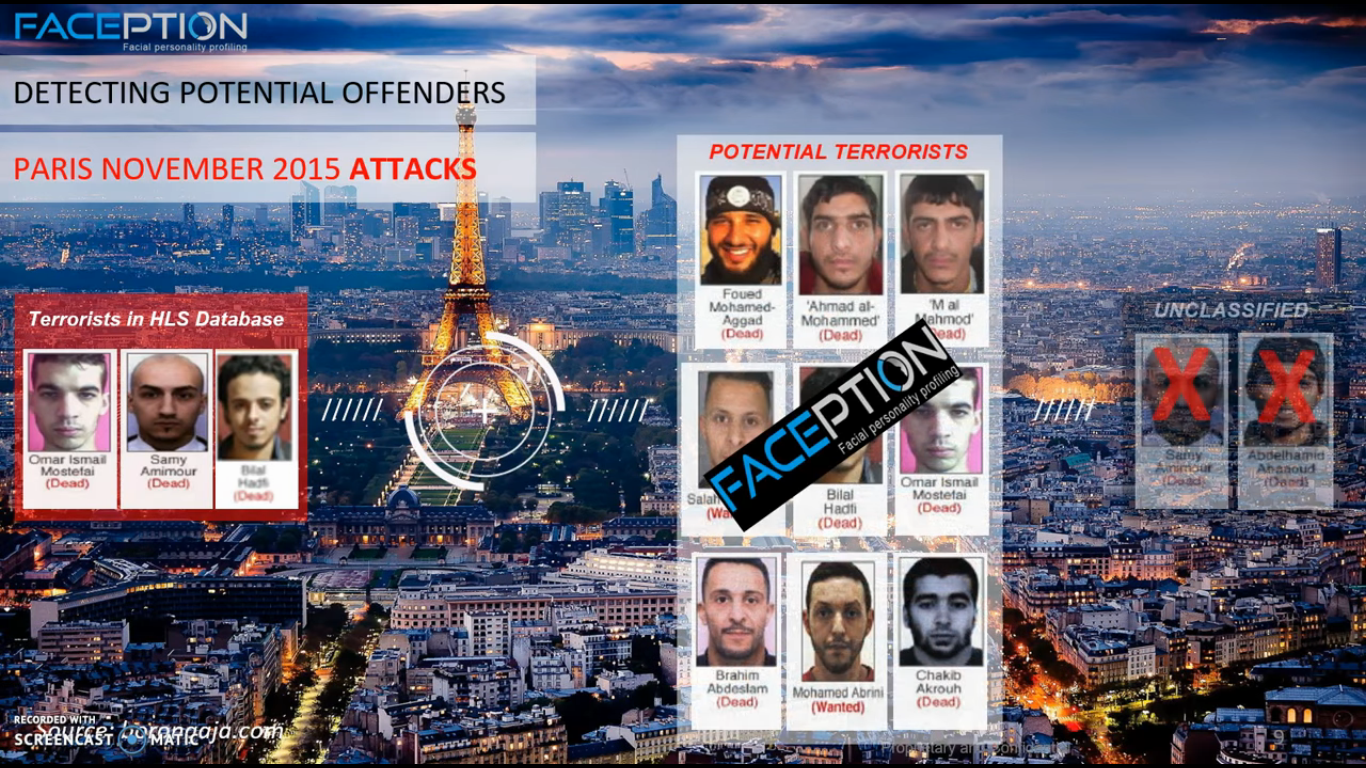

The case of Faception

Notes

- Vicious cycles: the compelling power (and sinister circularity) of “showing that it works”

(Still struggling to develop this idea: the magician’s trick of setting the stage and then making magic, which relies on having placed your audience in a frame where the result is made to be seen as magical.)

- Quackery / Pseudo-Scientific processes

- Normalizing